In only 20 years, robot assistants have moved out of science fiction and into many home and work environments. The trend is partially driven by innovative robot and artificial intelligence (AI) researchers like those in Rice University’s Kavraki Lab. In addition to their own research, these computer scientists also develop and support open source tools for the broader motion planning community.

“The general problem we saw and wanted to address is the lack of standardization when it comes to evaluating new motion planning algorithms,” said Constantinos Chamzas, one of the creators of MotionBenchMaker.

Chamzas brainstormed the issue with another Kavraki Lab researcher, Carlos Quintero-Peña.

Quintero-Peña said, “In robotics, there is a plethora of motion planning algorithms and many active efforts to develop even more efficient ones. Everyone’s perspective, and thus how they approach the very challenging motion planning problem, is different. We each want to share our discoveries with other motion planning specialists, but how can we compare algorithms? Is ours more effective or more efficient than existing solutions?

“Everyone has been creating their own ad-hoc problems for benchmarking. Providing a standard set of problems for benchmarking can save researchers time, eliminate bias, and provide a basis for comparison against other planners. Several motion planning testbeds were available, but each had been designed for a specific type of motion planner and thus had limited usefulness. We envisioned a more robust set of tools that could be used for testing motion planners across a broader spectrum.”

Chamzas and Quintero-Peña first tested their benchmark standardization theory by reaching out to colleagues working on manipulators in other facilities and universities, sharing their ideas and asking for feedback. They also contacted several authors of other motion planning standardization tools, to determine if existing testbeds could be merged into a common dataset.

Collaborating with robotics colleagues at Rice, the University of Wisconsin-Madison, and the Technische Universität Berlin, Chamzas and Quintero-Peña organized efforts to develop the open source platform and publish an introductory paper, which appeared in Robotics and Automation Letters (RAL) and was presented at the 2022 International Conference on Robotics and Automation (ICRA).

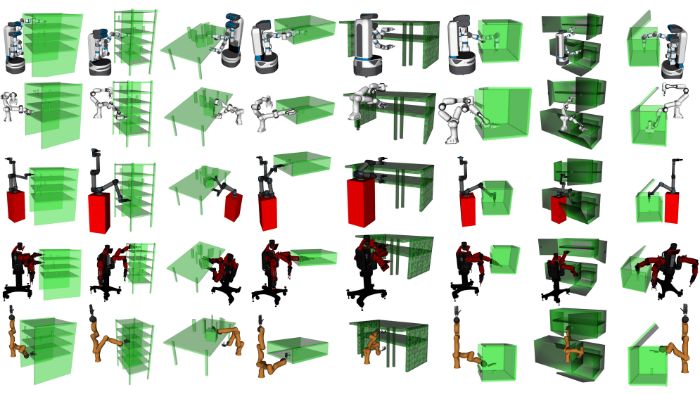

“MotionBenchMaker is a tool that can be used to procedurally generate datasets for a fair and unbiased evaluation of planners,” said Chamzas. “To accelerate motion planning research by streamlining and standardizing the test phase, we included 40 prefabricated datasets with five different but commonly used robots in eight environments.

“And although we launched with a very rich dataset, no one on the team considers it the gold standard. Many of the tests came out of our team’s own motion planning problems, but the planning community and its projects are very diverse. So any researcher who wants to use MotionBenchMaker can modify it to fit their own experiments. We built in the ability for users to create their own datasets within the tool as well as upload their own datasets to MotionBenchMaker to extend the applicability of the tool. It is very modular and flexible.”

Chamzas said he needed a tool such as MotionBenchMaker because his own research strives to help specific planner operations work better in new and challenging environments. His tests required a lot of different motion planning datasets. Other researchers are pushing boundaries with new motion planners for more dexterous robotic hands and even dual manipulators. Their tests are done on limited datasets, making it difficult to evaluate new methods.

Quintero-Peña’s interests lie in planning safe motions for robots in unstructured environments, where the robot may have to decide and act with incomplete information. Testing his own solutions revealed the need for a more standardized way to compare and track his results.

“MotionBenchMaker helps establish some common ground for basic ideas. Clearly the tool does not serve the needs of all motion planning researchers, and we understand it may be biased because of the perspectives we and our colleagues bring to the table. But for motion planning researchers in similar areas, this is a solid tool for comparing new algorithms,” said Quintero-Peña.

He said the last decade of robotics and AI research has seen great advancements in many areas including new models, new datasets, and new environments. Robots used in homes and warehouses experience very different scenes and results, which leads researchers to develop very different algorithms for those tasks.

Quintero-Peña said, “In research, you need space for people to think and create on their own. Supplying a foundation (common ground with common datasets and a common comparison platform) doesn’t limit creativity. MotionBenchMaker sets up a baseline; motion planning scientists still have room to imagine more or different solutions.

“We recognize that our tool won’t be applicable to all motion planners, but we believe MotionBenchMaker will shine when people both use it and contribute to it.”

Unlike other standardized datasets that lacked ongoing support to maintain their compatibility with advances in the field, MotionBenchMaker is set up for long-term success as an open source tool because its founders fully understand their significant and continued time commitment.

In recent years, Chamzas and Quintero-Peña have helped support another open source tool developed in the Kavraki Lab, the Open Motion Planning Library (OMPL). The library, which was designed to be easily integrated into a wide variety of systems, consists of many state-of-the-art sampling-based motion planning algorithms. OMPL serves as a standard collection of planners, and MotionBenchMaker can serve as a collection of problems on which to test and compare these planners.

Chamzas said, “The OMPL is a big library that has been widely adopted by industry and the academic community. Thousands of users depend on it to develop new planners or use the existing ones for real world applications, and the Kavraki Lab is dedicated to its continued support. That same spirit of community service is driving MotionBenchMaker. That means many people in our lab, including researchers who move on to other organizations after graduating or completing their postdocs, have committed to spending a lot of their free time to support MotionBenchMaker.”

Quintero-Peña added, “We will continue adding functionality, datasets, environments, and robots. Having the community use it and grow it will help improve its usefulness. Every time a new motion planning algorithm or paper on this kind of problem is published, if those authors have used MotionBenchMaker to compare their algorithms, that will add to its success.

“We have support from the IEEE and from professors and students around the world, but our original goal was to provide an easy-to-use process for evaluating and thoughtfully comparing motion planning algorithms for our broader research community. The ICRA audience response to our ideas about standardization was very positive.” The team also presented MotionBenchMaker at a fall workshop during ROSCon, the open-source robotics conference in Kyoto, and at the 2022 International Conference on Intelligent Robots and Systems (IROS).

Lydia Kavraki, the Noah Harding Professor of Computer Science and supervisor of this work, said the open source concept of the tool resonates with her overall philosophy. She is proud of the team’s efforts to produce and support the platform, which offers a robust set of tools and a path to evaluation standardization for the broader motion planning research community.

Kavraki said, “Our lab has been at the forefront of motion planning research for the past two decades. We strive to produce robust motion planners that are suitable for manipulators and high-dimensional robots. Our work with OMPL has helped our entire community move forward. This new tool, MotionBenchMaker, adds incredible value by systematizing the benchmarking of motion planners. It is a sign of a maturing field when rigorous benchmarking is adopted by the community. Our work will help evaluate traditional algorithms, but it will also help in the generation of datasets for learning algorithms and the evaluation of learning-based approaches for motion planning.”

Constantinos Chamzas is a Computer Science Ph.D. candidate, co-advised by Lydia Kavraki and Anshumali Shrivastava. He matriculated at Rice University in 2017, after completing his undergraduate work in Electrical and Computer Engineering at Aristotle University of Thessaloniki.

Carlos Quintero-Peña received a Fulbright Scholarship in 2019 to begin his Ph.D. work with Lydia Kavraki. He completed his M.Sc. in Electrical and Computer Engineering at the Universidad de los Andes in 2011 and continued his research while teaching Electronics Engineering, Robotics, and Machine Learning at his alma mater and at the Universidad Santo Tomás.

Zachary Kingston, a postdoctoral research associate, also contributed to the paper. He completed his Ph.D. in Computer Science at Rice University in 2021 under the direction of Lydia Kavraki.