For 25 years, the International Conference on Supercomputing (ICS) has been showcasing research results in high-performance computing systems. It is one of the premier events for supercomputer scientists like Rice University professor John Mellor-Crummey, whose research group presented two papers at the 2022 conference.

“Our group’s current focus is on building software tools that support performance measurement and analysis for the Department of Energy’s emerging exascale supercomputers,” said Mellor-Crummey. “Each exascale computer will be capable of performing 1.5 quintillion (1.5 × 10¹⁸) calculations per second. Colloquially, a quintillion is ‘a billion billion.’

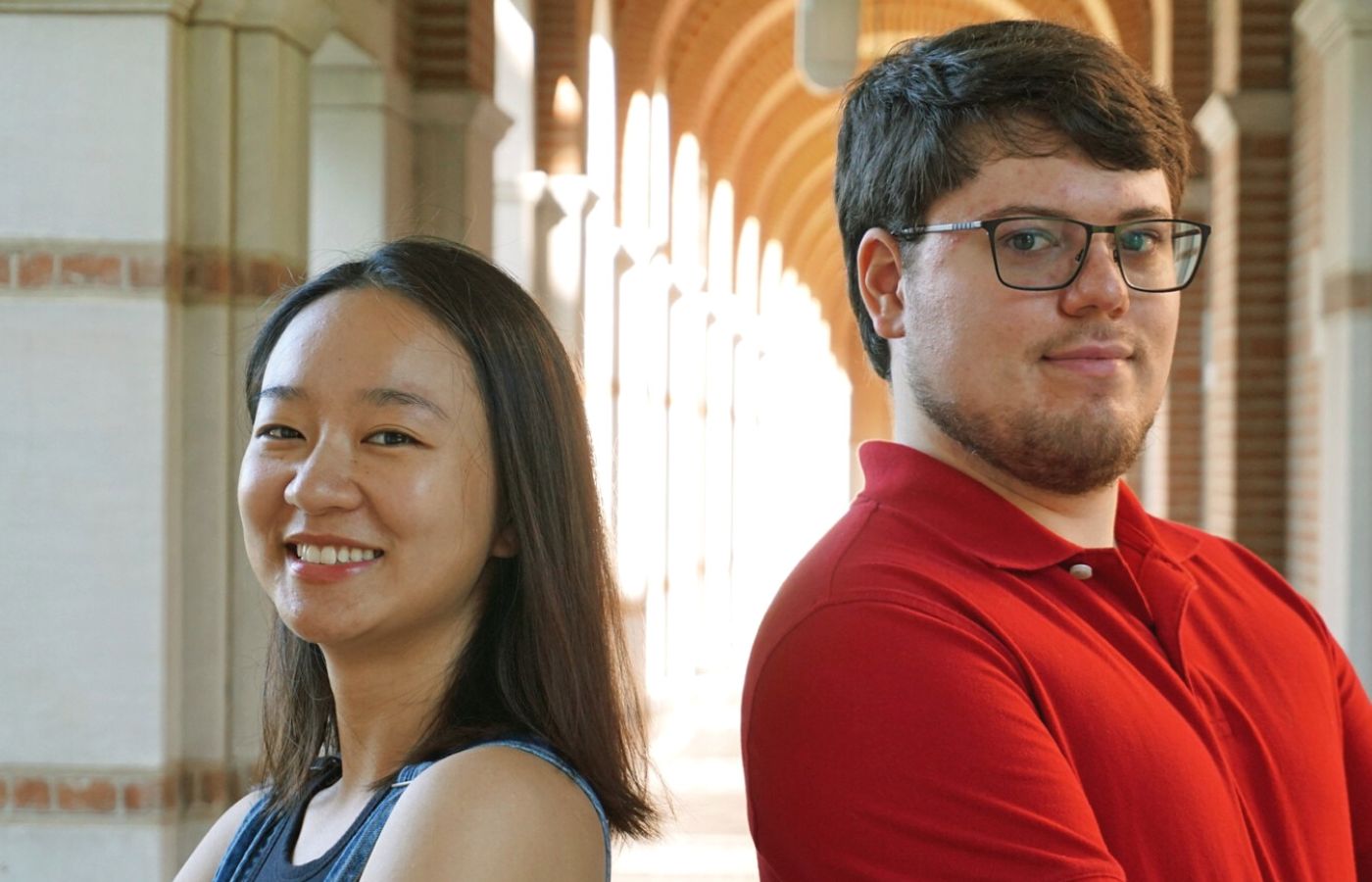

“One of our papers, presented at the ICS by Jonathon Anderson and Yumeng Liu, documents our preparations to analyze application performance on exascale computers. The other ICS paper focuses on techniques to measure the performance of computations on Graphics Processing Unit (GPU) accelerators, in detail and with low overhead. The Department of Energy’s exascale computers will depend on GPUs for over 95% of their computing power.”

Before collaborating on the exascale computer performance tuning project, Anderson and Liu had approached Mellor-Crummey’s research group from different directions. Liu wanted to help software engineers make their programs run more effectively and efficiently. Anderson was fascinated by the firehose-like outpouring of data produced by high performance computers.

His interest in the growing field of big data was matched by his appreciation of parallel computing, which was expanding as supercomputers transitioned from conventional processors to GPUs.

“Central processing units (CPUs) drive most of our daily machines including computers, phones, and even Roombas,” said Anderson. “They are everywhere, in everything, and they do their jobs very well. CPUs perform their work by focusing on only one job at a time.”

“Graphics processing units (GPUs) have a different design that works on multiple data elements at the same time, in parallel, to display graphics across a screen. And it turns out that this kind of parallel computing has applications for physics problems such as weather simulation.”

“From a grid of a few million points on a part of the ocean, scientists can determine weather patterns. But understanding weather over a tiny section of the ocean isn’t very helpful. Scientists need to simulate weather patterns across the entire globe, at the same time. Back in the early days of big data processing, scientists turned to CPUs to tackle this kind of huge processing problem. However, GPUs deliver higher performance with lower energy requirements than CPUs, so GPUs are now driving parallel computations in all of the largest supercomputers.”

His research colleague, Liu, was not thinking in terms of supercomputers when she discovered her passion for research as an undergraduate student.

She said, “After spending one summer researching with my advisor, I knew I wanted to do more. I had already realized that creating my own applications was less interesting to me than helping other programmers improve theirs. To do that, I would need to better understand how hardware and middleware worked. The beauty of John’s group is that I can continue my research, learn more about middleware and hardware, and help improve the performance of other engineers’ programs.”

Today, the programs her research will aid most are those used to process big data on supercomputers driven by GPUs. Liu said that measuring the execution of programs generates a lot of useful data, but the current nature of GPU processing means a lot of useless measurements accumulate as a byproduct.

“Sparsity is the term used to indicate low density in the data,” said Liu. “If 98% of your data output is zeroes, then your data density is 2%. For researchers measuring application performance on supercomputers, that 2% of non-zero measurement data is full of useful information. However, analyzing and storing lots of zeroes takes a lot of cycles and space, resources that are not then available to the other scientists who want to run jobs on the machines. With our enhancements, we can successfully reduce, by over 1000x, the space required for processing performance data for big jobs.”
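To make the density arithmetic concrete, here is a minimal Python sketch of one generic way to avoid storing zeroes: keep only (index, value) pairs for the non-zero entries. The function names and the synthetic 2%-dense data are hypothetical illustrations, not the format Liu and Anderson use. At this density the saving is only about 25x (20 pairs, or 40 numbers, versus 1,000 values), well short of the over-1000x reduction their techniques achieve on real performance data.

```python
# Hypothetical sketch: a generic (index, value) sparse encoding,
# not the representation used in Liu and Anderson's work.

def to_sparse(dense):
    """Keep only (index, value) pairs for non-zero measurements."""
    return [(i, v) for i, v in enumerate(dense) if v != 0]

def to_dense(sparse, length):
    """Rebuild the full vector, filling every missing index with zero."""
    dense = [0] * length
    for i, v in sparse:
        dense[i] = v
    return dense

def density(dense):
    """Fraction of entries that are non-zero."""
    return sum(1 for v in dense if v != 0) / len(dense)

# Synthetic data: 1,000 measurements, every 50th one non-zero (2% density).
measurements = [0] * 1000
for i in range(0, 1000, 50):
    measurements[i] = i + 1

sparse = to_sparse(measurements)
print(density(measurements))  # 0.02
print(len(sparse))            # 20 stored pairs instead of 1,000 values

# The encoding is lossless: decoding restores every zero exactly.
assert to_dense(sparse, len(measurements)) == measurements
```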

Liu uses a supply chain analogy to illustrate their solution for the meaningless data storage issue. She said if an empty warehouse can hold up to 10,000 boxes and a truck arrives with 12,000 boxes, the unloading job cannot be completed. In this analogy, jobs that cannot be completed are not begun. If a truck with 9,000 boxes arrives, the unloading job is completed but leaves only a 1,000-box quota for another job. Following the warehouse analogy, the performance enhancement solution Liu and Anderson devised condenses the extra space in and around the boxes, without damaging the contents, so that only space for about 7 boxes is required for that 9,000-box job.

Beyond storing surplus zeroes efficiently, Liu and Anderson also tackled the problem of quickly analyzing performance measurements for large jobs. By skipping the extraneous zeroes, their solution reduces the number of cycles needed for that analysis, which makes it faster, without losing any accuracy.
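The accuracy claim rests on a simple property: zeroes contribute nothing to a sum, so an analysis that visits only the stored non-zero entries reaches the same answer as one that walks every zero. The Python sketch below assumes a hypothetical layout in which each profile is a dict mapping a calling-context id to a metric value, with zeroes omitted; the names are illustrative and it demonstrates the principle rather than the group's actual analysis.

```python
# Hypothetical layout: each profile is {context_id: metric_value},
# with zero-valued contexts simply left out.

def total_dense(dense):
    # Visits every entry, including all the zeros.
    return sum(dense)

def total_sparse(sparse):
    # Visits only the stored non-zero entries.
    return sum(sparse.values())

def merge_profiles(profiles):
    """Sum metric values per context across many sparse profiles."""
    merged = {}
    for profile in profiles:
        for ctx, value in profile.items():
            merged[ctx] = merged.get(ctx, 0) + value
    return merged

length = 100_000
profile_a = {7: 3.5, 42: 1.25, 99_999: 2.0}
profile_b = {42: 0.75, 500: 4.0}

merged = merge_profiles([profile_a, profile_b])
print(merged)  # {7: 3.5, 42: 2.0, 99999: 2.0, 500: 4.0}

# Dense equivalent: materialize every zero, then add element-wise.
dense_a = [0.0] * length
dense_b = [0.0] * length
for ctx, v in profile_a.items():
    dense_a[ctx] = v
for ctx, v in profile_b.items():
    dense_b[ctx] = v
dense_merged = [a + b for a, b in zip(dense_a, dense_b)]

# Same result, whether or not the zeros are visited.
assert total_sparse(merged) == total_dense(dense_merged)
print(total_sparse(merged))  # 11.5
```

Merging here touches only five stored entries, while the dense version walks 100,000 slots per profile; the cost of the sparse approach grows with the number of non-zero measurements rather than with the size of the measurement space.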

“My interest in parallel computing is what prompted me to enroll in one of John’s graduate-level courses,” said Anderson. “And that course led me to John’s research team, where I worked with him and another researcher on quickly analyzing machine code for applications, which is needed to attribute performance losses to programs. With that problem solved, the next step was to improve analysis of performance measurements on exascale computers.

“John, Yumeng and I began working on parallel analysis of performance data while exascale computers were still being designed and built. Since the performance analysis challenges we were working on are already present on today’s supercomputers, we were able to test our solutions on one of them: Perlmutter at Lawrence Berkeley National Laboratory. Perlmutter is a forerunner to Frontier, the world’s first exascale computer. Frontier was launched at Oak Ridge National Laboratory at the end of May, and we expect our solution to work just as well on Frontier as on Perlmutter.”

Several exascale supercomputers are being built by the Department of Energy to help solve some of the most complex scientific research questions and challenges facing the world, including the creation of more realistic Earth system and climate models. Exascale computer simulations and data analyses will also be used to help gain insights needed to design fusion power plants and create new materials at the nanoscale.

Anderson said he is excited by the range of possible applications for exascale computers, and his own contribution feels a little like that of a machinist supporting the engine crew for a race car.