For both humans and their robot assistants, solving a new problem from scratch takes more time and energy than remembering and adapting a previous solution. If robots can improve their recall accuracy when faced with new situations, their effectiveness will increase.

Constantinos Chamzas, one of the researchers in Rice University’s Kavraki Lab, presented a paper on an improved recall method for robots at the International Conference on Robotics and Automation (ICRA). He calls the method FIRE: Fast retrIeval of Relevant Experiences.

“The idea of FIRE begins with a robot that solves a specific task, perhaps moving objects around a bookshelf in an office environment,” said Chamzas.

“The robot picks up an object and places it, then picks up a second object and places it. It spends its lifetime doing these same tasks, or maybe it selects items from a bucket or places objects in different positions — but the problems the robot solves all have this similarity.

“Now move the robot to a kitchen environment. Some of the new tasks will be similar to the actions already in its library of experiences. Everything in the new environment may be unique, but with minor adjustments the same experiences apply. Inspired by the ways humans can use their past memories to solve similar problems, FIRE tries to do something similar.”

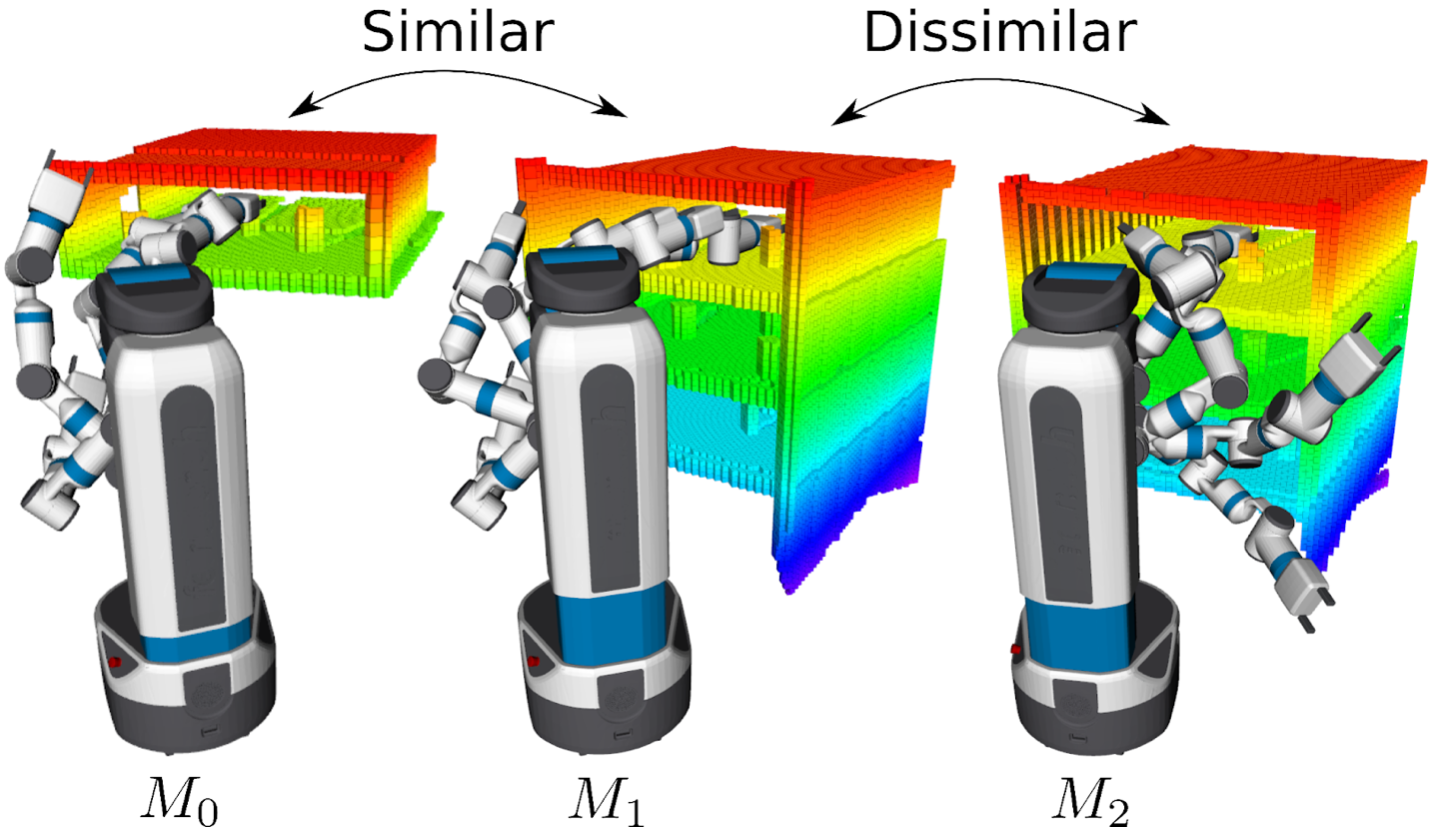

Chamzas’ earlier research showed that his robot could find prior experiences, but determining which of those experiences were similar or dissimilar to a new problem required more work. He discovered that some tasks that do not look alike to a human are in fact closely related in terms of the basic motions a robot uses to accomplish them. Examples of such tasks are noted in his video and shown in the illustration below.

“The environments for reaching into an office bookshelf and reaching into a kitchen cabinet may look different, but the solutions are similar. We can take parts of one action and apply them to the other,” said Chamzas.

“On the other hand, a small change in the environment may significantly impact a task while appearing visually similar. For example, when the robot is attempting to pick up a mug, the mug’s orientation matters a lot. Based on where the mug’s handle is, the robot may reach from the left side or the right side. If the mug is in an awkward position, the robot may need a series of more complicated motions.

“Although the mug positions may appear to be similar, the actions to pick it up will be different. Our research uses a robust dataset of motion planning actions so that robots can better search for experiences that are similar.”

To determine which motion planning problems are similar, Chamzas and his co-authors turned to a machine learning tool: Siamese neural networks. They first developed an algorithm to identify problems with similar solutions in a new dataset, then trained their system to recognize those and other similarities by feeding the data through a Siamese network, the same kind of architecture used to detect forged signatures and to recognize faces.

“A Siamese network helps explain how systems learn,” said Chamzas. “Technically, two identical neural networks use identical processes on two different inputs to determine if they are similar or different. Say we want to create a facial recognition system and we begin with many views of three people: me, Lydia, and Aedan.

“Now we add a new person to the database, and we have only one photo of this person. Are there any basic characteristics that are similar to the examples in the existing set? Our research uses this same concept, but we are comparing motion planning problems instead of faces,” Chamzas said.
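The core idea Chamzas describes, two identical networks applying the same processing to two different inputs, can be sketched in a few lines. The example below is a toy illustration under stated assumptions, not FIRE’s actual network: the encoder is a single hypothetical fixed weight matrix (a real Siamese network learns these weights), and the comparison uses Euclidean distance with the classic contrastive loss.

```python
import math

# Hypothetical toy encoder weights (2 x 3). A real Siamese network would learn these;
# they are fixed here purely for illustration.
W = [[0.5, -0.2, 0.1],
     [0.3, 0.8, -0.5]]

def embed(x, weights=W):
    """Shared encoder: the SAME weights map any input vector to an embedding."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]

def distance(a, b):
    """Euclidean distance between two embeddings."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def siamese_distance(x1, x2):
    """Run both inputs through the identical encoder, then compare the outputs."""
    return distance(embed(x1), embed(x2))

def contrastive_loss(x1, x2, same, margin=1.0):
    """Contrastive loss: pull similar pairs together and push
    dissimilar pairs at least `margin` apart in embedding space."""
    d = siamese_distance(x1, x2)
    if same:
        return d ** 2
    return max(0.0, margin - d) ** 2
```

Because both inputs pass through one shared `embed` function, the comparison is symmetric, and two identical inputs always have distance zero. Training would adjust `W` so that the loss is small for pairs labeled similar and large for pairs labeled different.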

A Siamese network does not compare raw images directly. Each input is first converted into a set of data points, a numerical representation, and those representations are what the network analyzes to judge similarity or difference. Because Siamese networks are built to compare such data inputs, Chamzas and his colleagues developed a way to represent robot motion planning problems as data inputs.
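To make “representing a motion planning problem as a data input” concrete, here is one simple, hypothetical encoding. It is an assumption for illustration only and not the representation used in the FIRE paper: box-shaped obstacles in a 2D workspace are rasterized into a small occupancy grid, and the start and goal positions are appended to form a single flat vector that a network could consume.

```python
from typing import List, Sequence, Tuple

# Hypothetical obstacle format: axis-aligned box (xmin, ymin, xmax, ymax).
Box = Tuple[float, float, float, float]

def occupancy_grid(obstacles: Sequence[Box], size: int = 4, extent: float = 1.0) -> List[float]:
    """Rasterize box obstacles into a flattened size x size grid over [0, extent]^2.
    A cell is 1.0 if its center lies inside any obstacle, else 0.0."""
    cell = extent / size
    grid = []
    for row in range(size):
        for col in range(size):
            cx = (col + 0.5) * cell  # x coordinate of the cell center
            cy = (row + 0.5) * cell  # y coordinate of the cell center
            occupied = any(x0 <= cx <= x1 and y0 <= cy <= y1
                           for (x0, y0, x1, y1) in obstacles)
            grid.append(1.0 if occupied else 0.0)
    return grid

def problem_to_vector(obstacles: Sequence[Box],
                      start: Tuple[float, float],
                      goal: Tuple[float, float],
                      size: int = 4) -> List[float]:
    """Encode a planning problem (obstacles + start + goal) as one flat feature vector."""
    return occupancy_grid(obstacles, size) + list(start) + list(goal)
```

With `size=4`, one obstacle covering the lower-left quarter of the workspace marks four of the sixteen cells as occupied, and the full vector has 16 + 2 + 2 = 20 entries. Vectors like this could then be fed to a shared encoder so that problems, rather than faces, are compared.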

He said, “We added more data points to our dataset so when a new challenge is presented, the robot can quickly search the collection of motion planning problems for similar experiences. Even if the robot has been trained in a kitchen, it will be able to apply its previous experiences to placing objects in an office bookshelf.

“Placing or moving objects around obstacles on a table requires a different set of motions, but there are some general similarities in the experience; our program helps the robot discover the commonalities between the two. It’s like asking, ‘Have I solved this before?’ Well no, but here is a problem that is similar. With FIRE, we can continue adding experiences or solutions for the robot to remember and copy.”
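The “Have I solved this before?” question amounts to a nearest-neighbor lookup over stored experiences. The sketch below assumes each past problem has already been turned into an embedding (for example, by a trained Siamese encoder) and stored alongside the path that solved it; the class and field names are hypothetical, and the search is a simple brute-force scan rather than whatever index FIRE itself uses.

```python
import math
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

@dataclass
class Experience:
    embedding: List[float]               # hypothetical: output of a learned encoder
    solution: List[Tuple[float, float]]  # stored waypoints that solved the past problem

@dataclass
class ExperienceLibrary:
    items: List[Experience] = field(default_factory=list)

    def add(self, embedding: Sequence[float], solution: Sequence[Tuple[float, float]]) -> None:
        """Remember a solved problem so it can be reused later."""
        self.items.append(Experience(list(embedding), list(solution)))

    def retrieve(self, query: Sequence[float], k: int = 1) -> List[Experience]:
        """Return the k stored experiences whose embeddings are closest to the query,
        using a brute-force scan ranked by Euclidean distance."""
        ranked = sorted(self.items, key=lambda e: math.dist(e.embedding, query))
        return ranked[:k]
```

Usage: after `lib.add([0.0, 0.0], path_a)` and `lib.add([5.0, 5.0], path_b)`, a query of `[0.5, 0.2]` retrieves the experience holding `path_a`, whose stored solution the planner could then adapt to the new problem. A linear scan is fine for a small library; a large one would typically rely on an approximate nearest-neighbor index to keep retrieval fast.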

Given Chamzas’ dual research interests in robotics and machine learning, it is no surprise that FIRE’s learned notion of similarity yields better results than the hand-crafted similarity functions typically included with motion planning solutions. Like several of his previous papers, the FIRE research draws on his work with both of his co-advisors, Lydia Kavraki and Anshumali Shrivastava.

“I applied to Rice’s Ph.D. program hoping to find a niche that combined machine learning and robotics. My main focus is on robotics, and that is why I wanted to work with Professor Kavraki. When I arrived at Rice and had a chance to talk with her about my interests, she connected me with Anshu and he’s been my co-advisor ever since,” said Chamzas. “Lydia Kavraki is an ideal role model for researchers who may be pulled by diverse interests.”

The Kavraki Lab spans computational robotics, artificial intelligence, and biomedicine. Kavraki is the Noah Harding Professor of Computer Science, and also professor of Bioengineering, professor of Electrical and Computer Engineering, professor of Mechanical Engineering, and director of the Ken Kennedy Institute.

“Constantinos’ interest in machine learning was a solid match for his research in robotics,” said Kavraki. “It is a big challenge in robotics right now to understand what advances can be achieved with the use of machine learning. Constantinos’ work sheds light in that direction.”

Constantinos Chamzas is a Computer Science Ph.D. candidate, co-advised by Lydia Kavraki and Anshumali Shrivastava. He matriculated at Rice University in 2017, after completing his undergraduate work in Electrical and Computer Engineering at Aristotle University of Thessaloniki.

Aedan Cullen, who contributed to the paper, matriculated in 2019 to pursue a B.S. in Computer Science. He joined the Kavraki Lab as a sophomore and is currently an intern in the Platform Architecture group at Apple.