Computer Scientists from Rice University have mathematically demonstrated that applied genetic research has unknowingly come close to finding the theoretical lower bound of data required to make meaningful genetic insights.

The problem their research addresses, said Bryce Kille, a computer science graduate student for whom the work served as a capstone to his PhD, has to do with lowering the amount of compute memory needed to analyze ever-growing genomic databases while meeting certain constraints. This means sampling representative portions of a genome, which takes far less processing power than comparing entire genomes. The research considers “the question of how much memory you really need to sample parts of the genome” while still meeting certain guarantees, he said.

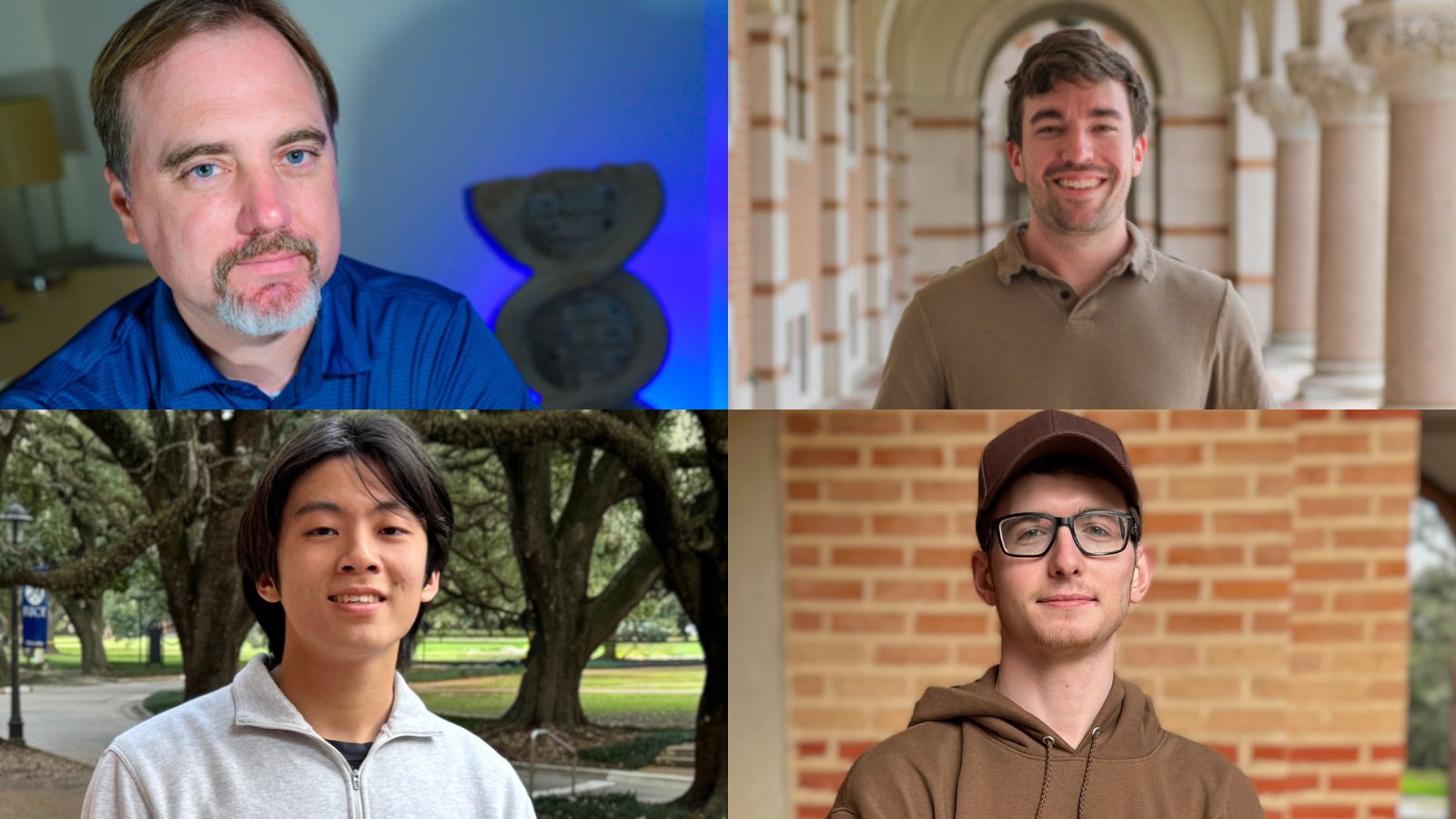

"A near-tight lower bound on the density of forward sampling schemes," appears in the January 2025 issue of the journal Bioinformatics, published by Oxford University Press. Kille and Ragnar Groot Koerkamp (from ETH Zurich) were co-first authors of the work. Rice undergraduates Alan Liu and Drake McAdams were coauthors. Todd Treangen, Rice Associate Professor of Computer Science and AI2Health cluster lead (Ken Kennedy Institute), was senior author of the study.

Exponential growth of genetic data

The motivation for Treangen and Kille’s research “really comes from the recent deluge of genomic data,” Treangen said.

“When I started my PhD, we only had a handful of genomes in publicly available databases. High-throughput sequencers–technology that enabled us to efficiently and affordably read the genomic information of species on our planet–had not yet arrived,” he explained. “In the early 2000s, cost and time prohibited genomic data from being available in the databases.”

“Fast forward all the way to Bryce's PhD,” Treangen continued. “The landscape has totally changed. Today, we are now regularly comparing from 10,000 to 100,000 genomes of a single species.” Researchers’ initial focus on what questions they could answer with the data they had shifted to “how do we scale our methods up to even start to help answer biologically relevant research questions?” he said.

Faced with a magnitude of data, scientists developed algorithms that use representative sequences of large amounts of genetic data to analyze datasets. K-mer sampling is one such scheme; it’s an algorithm that compares sampled DNA sequences of length k within much larger sequences to enable fruitful research at scale.

For the last decade, to improve speed and efficiency, researchers have been trying to find algorithms to sample k-mers from large sequences under the constraints that 1) at least one k-mer is sampled from any sub-sequence of w consecutive k-mers, and 2) if two sub-sequences of w consecutive k-mers are identical, the algorithm will sample the same k-mer for both sub-sequences. In particular, the challenge is to satisfy these constraints while sampling as few k-mers as possible. This is where Treangan and Kille’s research fits in.

“Massive amounts of genomic data is what caused people to start using k-mer sampling schemes in the first place. But as that's grown over the last 10 years, that spurred people to make better k-mer sampling schemes,” Kille explained.

“The only way to work practically” with terabytes of data “is to down-sample it, and we want to down-sample it in the best way possible,” he said. “People have been trying to write better down-sampling methods, but without knowing what the best down-sampling method is.” Which is where the lower bound comes in.

Significance of lower density and lower bound

Known sampling schemes achieve certain densities–i.e., given the w and k parameters, researchers know what proportion of k-mers an existing scheme will select from a sequence, Kille said. These densities function as an upper bound of what’s possible. “On the other hand, there's something called a lower bound, which is purely theoretical,” he added. “The whole point of the lower bound is to say we have a formula that can guarantee, essentially, that you can't obtain a density lower than this number. It doesn't say this density is achievable. It's saying, we know you can't do better than this.”

Between the upper and lower bounds is a gray area, Kille said, where “we don't know if we can actually achieve metrics that are lower than existing schemes, but higher than the lower bound. There's been over a decade of research trying to decrease this metric.”

“The fewer k-mers we select while still satisfying these constraints, the smaller and faster the data structure becomes,” said co-first author Groot Koerkamp. “In this paper, we did the opposite: proving that given the constraints, one has to select at least so many k-mers. Initially, this may sound like a 'negative' result. But in practice, now that a good lower bound is known, we have a clear goal to work toward.”

Theoretical results showed applied research was already close to the lower bound

“People are almost always focused on the applied side as opposed to the theoretical side” of sampling schemes, Kille said. It is “relatively rare to see new theory come out without a direct application.” Usually, the goal of this research is “having a new method, whereas our goal has no new method. The theory is really the result.” He said, “we realized we don't need to be practical. Theory is actually fantastic to begin with.”

“There are scientists who have invested a lot of effort over the last five to six years, a tremendous amount of research activity” devoted to better down-sampling, Treangen said, but “some had an inaccurate impression of how much more they could actually down-sample. Bryce’s work showed that they were pretty close to already getting to the optimal value…. if you keep working on this problem, you're going to get a 5% savings at most when you previously thought that could be a 50% savings.”

The primary results of the research demonstrate “a heavily improved understanding of how low we could go. And that's going to help allow us to focus our efforts on other problems,” Treangen said.

“Now we know that all the effort got really close to what is optimal without even knowing it,” agreed Kille. “It helps inform people whether or not they want to spend more time trying to optimize this, knowing that there's actually not too much more density you can squeeze out of it.”

Practical applications of research

This work is useful not only for validating that researchers were already close to the lower bound, but also “lets them know what’s possible,” said Kille, and helps them “direct or divert” their research accordingly.

Another reason scientists are interested in efficient k-mer sampling is cost. “Saving 10% of memory might not seem like too big of a deal. It's not a huge number,” Kille said. “But these sampling schemes are used nearly everywhere in genomics. If you can save 10% of memory for a k-mer sampling scheme, you're saving 10% memory across the whole world of comparative genomics applications. For people who have to pay for compute costs on servers, saving memory translates to dollar signs. These small incremental changes add up.”

Undergrads become coauthors in a serendipitous collaboration

The question of density in k-mer sampling had been in the back of his head for a while, Kille said, and “we had a couple undergraduates who wanted to do some summer research.”

He “boiled it down to pure math” for undergraduate interns Alan Liu and Drake McAdams. “Todd and I thought it was a good problem to not necessarily solve but make some progress toward, and it would be fun,” Kille remembered.

Kille’s chance meeting at a conference brought another collaborator onboard: Groot Koerkamp, a computational biologist earning his PhD at ETH Zurich.

“We started talking about this stuff,” said Kille, and “the odds of finding somebody looking at a problem like this at the same time are rare. We were working in parallel, and it turns out we had really good complementary results, and by combining them, things started snowballing really fast.”

Groot Koerkamp posted a proof of a lower bound on k-mer sampling density on his blog. Kille saw it and “reached out to say that he and his students had a draft of a paper with a very similar result,” Groot Koerkamp said. “We decided to merge our efforts. The collaboration made the project more fun, faster to complete, and with better results as well!”

“This is definitely one of the most collaborative projects I've been on,” said Kille. “When you have the right group of people, things can really just explode into results.”

He was particularly impressed with the work McAdams and Liu contributed. “It was shocking to me how much they worked on this and how much they contributed,” Kille said. “A lot of the time, I forgot that I was working with undergraduates.”

Liu, whose contributions included developing linear programs to verify lower bounds, said, “The project was an eye-opening experience and testament to how collaboration fuels success in research.”

It also made an indelible impression. “This project has greatly influenced my academic trajectory,” he said. “I am now applying to graduate programs to explore more challenges in bioinformatics and healthcare.”

McAdams wrote some of the proofs and provided conjectures, and he agreed that “It really was very collaborative. It felt very open.” His enthusiasm led him to willingly “stay up [late] when I was particularly interested in one aspect and wanted to find a good proof for it.”

Treangen was also impressed with Liu’s and McAdams’ work. “It was very unique, and a great example of the impactful research that undergrads can do at Rice,” he said. He added that undergraduate contributions to research are not uncommon, but they do not always result in co-authorship on a published paper.

Treangen leads a recently formed Office of Undergraduate Research and Inquiry (OURI) VIP-supported research team called GenomeSleuths, which is designed to provide similar experiences to Rice undergraduates who are interested in computational biology and algorithms.

“Everybody contributed to every part of the paper, which was really nice,” said Kille. “Working on what was supposed to be a fun math summer problem turned into something really useful.”

This work was supported by the National Library of Medicine Training Program in Biomedical Informatics and Data Science [T15LM007093 to Bryce Kille], the National Institute of Allergy and Infectious Diseases [P01-AI152999 to Todd Treangen], the National Science Foundation awards [IIS-2239114 and EF-2126387 to Todd Treangen], ETH Research Grant ETH-1721-1 [to Gunnar Rätsch], and the Ken Kennedy Institute at Rice.