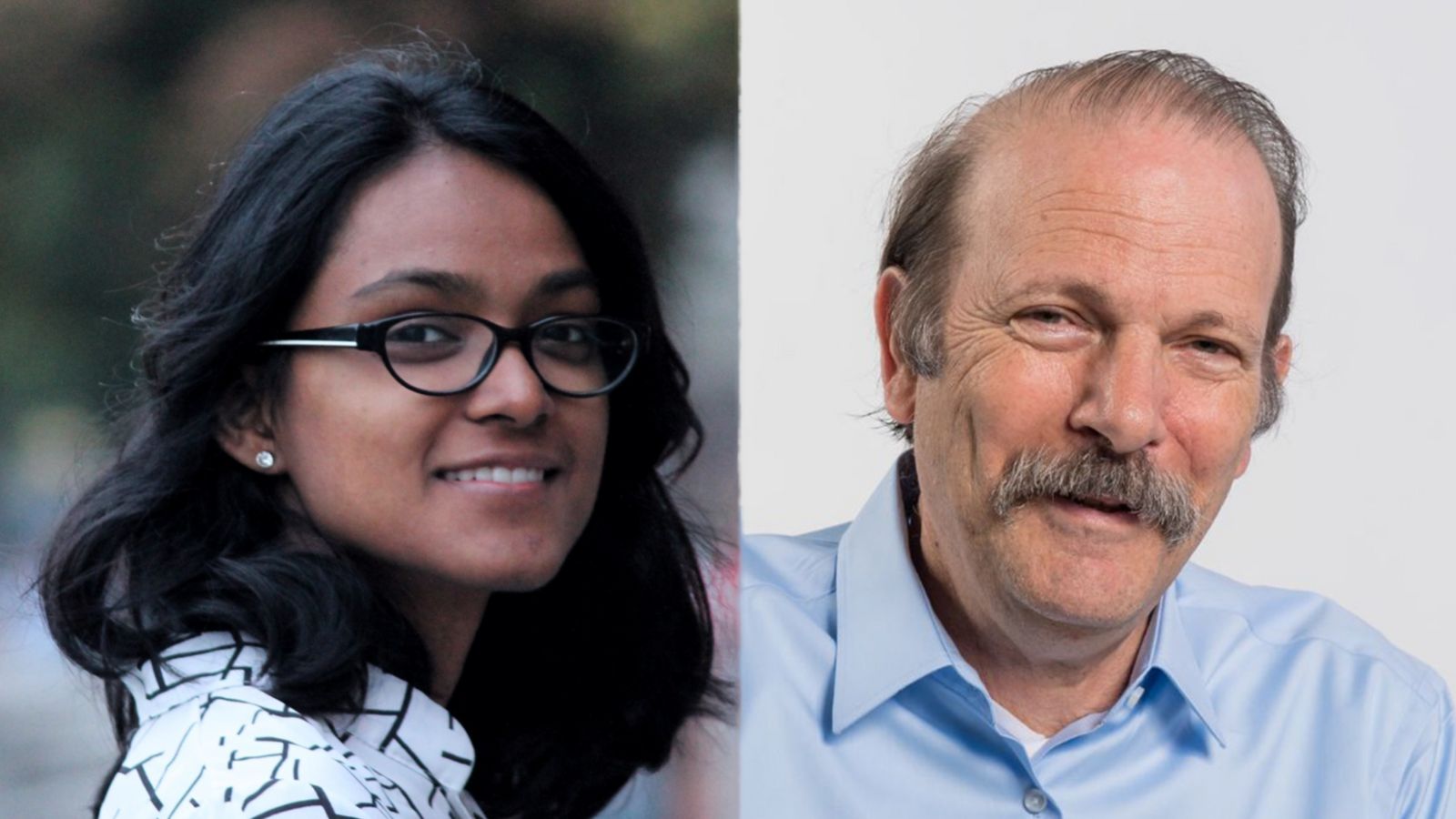

“Moshe, our best solution has an extra exponential blow-up,” said Suguman Bansal, who had recently defended her Rice University Ph.D. dissertation and joined several other computer science graduate students to explore what seemed to be straightforward model checking for Linear Temporal Logic over finite traces (LTLf).

Rice University Professor Moshe Vardi responded exactly as the team of graduate students expected. “He told us we must be doing something wrong and started to solve it on his own,” said Bansal, who is now an assistant professor in the School of Computer Science at Georgia Tech. “This is not the kind of conversation you want to have with your advisor at the end of your Ph.D. program. We were all a little worried that Moshe would rethink our qualifications.”

Instead, Vardi confirmed their initial findings at the end of the day and the team’s subsequent research was recognized as the best paper at the 2023 International Symposium on Automated Technology for Verification and Analysis (ATVA) in Singapore.

Bansal describes the broad topic of formal methods through its two cornerstones, verification and synthesis, “Verification seeks to check if a computational system is correct against a given specification. Synthesis seeks to automatically generate a system that is correct from a given specification. The specification simply describes desired properties rigorously using logic. LTLf is one such logic.”

"Prior work on LTLf had focused on the synthesis problem, including another paper we had published in AAAI 2020. But, we began to wonder how to ensure our implementation was correct. So, we began discussing the model-checking problem (a form of verification) using LTLf," said Bansal.

She added her efforts to those of then Rice CS Ph.D. students Lucas Martinelli Tabajara and Andrew Wells. Tabajara is now a formal-methods researcher with Runtime Verification Inc. and Wells is an Amazon applied scientist in the Automated-Reasoning Group. Another of their coauthors, Yong Li, had been a visiting Ph.D. student in Vardi’s group before returning to China to complete his degree. He is now a Marie Skłodowska-Curie Research Fellow at the University of Liverpool.

“Model checking is a classical approach that was introduced 40 years ago,” explained Vardi. The more prevalent literature deals with LTL (Linear Temporal Logic), which is useful for describing properties over executions of infinite length. More recently, LTLf has begun gaining traction due to real-world applications with behaviors that are often better expressed over executions of a finite but unbounded length.”

Bansal said, “The folklore among formal-methods researchers says that problems over finite-executions are at most as hard as their infinite-execution counterparts. In contrast, we observed that model-checking LTLf seemed to grow exponentially more than model-checking LTL. That was a surprising development that took us a long time to accept.”

“None of us wanted to tell Moshe we were stuck on this problem that sounded so simple. Yong had graduated a few months before me, Lucas was about to graduate, and Andrew was closing in on graduation. Having been advised by Moshe – a world expert in model checking – we thought we should be able to solve the problem. But after failing to improve the result for a long time, we finally told Moshe that while it didn’t make sense, it seemed that LTLf model checking was exponentially harder.”

Vardi responded saying it was one of the most standard of problems and it should not be harder. “If there was anything my proteges learned from me, it should have been model checking,” said Vardi. “Then…several hours later, I had to email Suguman and say that they seem to be right and LTLf model checking does seem to be exponentially harder.”

“So, Moshe tells us we may be right and that our exponentially harder upper bound may be the best we can get,” said Bansal. “He charged us to find a matching lower bound and gave us some ideas on how to go about proving such a lower bound, and we did. We found that indeed LTLf model checking is exponentially harder than LTL model checking, which can be done using polynomial-size memory. LTLf model checking, we proved, requires exponential-size memory.”

Although their findings were solid, their paper was rejected the first two times the team submitted it to a conference. After the second rejection, the team dug further into the reviewers’ comments; most were complimentary, but one really challenged them to explain why anyone would care about their research.

When they submitted a third time, they shifted their opening but changed none of their findings. Bansal said in the excitement of the result, they had lost sight of the original motivation. “Several LTLf synthesis engines exist today, to the extent that the annual synthesis competition SYNTCOMP now runs a track for LTLf. Nevertheless, there is no existing way to check if these implementations are correct. Model checking LTLf could help here. That’s the connection we made when we rewrote it for the third time.”

Their work was so clear and so surprising that the conference chairs revealed that they had not seen such high scores on any other paper.

Vardi said, “Every reviewer gave this paper their top score. Even the program chairs said there was no contest. Choosing ours as the best paper for this conference was one of the easiest decisions they’d ever made.”

Bansal was delighted with a different aspect of the work. “There is another side to this for me. On paper, I officially graduated with my Ph.D. the day I submitted my dissertation. But in spirit, I graduated when Moshe came back and told us we were right. He is the world expert in this area; there is nothing in this space that he does not know. And to stump him is my biggest victory ever,” she said with a laugh.